返回大数据案例首页

《大数据课程实验案例:网站用户行为分析----步骤二:Hive数据分析》

开发团队:厦门大学数据库实验室 联系人:林子雨老师 ziyulin@xmu.edu.cn

版权声明:版权归厦门大学数据库实验室所有,请勿用于商业用途;未经授权,其他网站请勿转载

本文介绍大数据课程实验案例“网站用户行为分析”的第二个步骤,Hive数据分析。在实践本步骤之前,请先完成该实验案例的第一个步骤大数据案例——本地数据集上传到数据仓库Hive。这里假设你已经完成了前面的第一个步骤。

所需知识储备

数据仓库Hive概念及其基本原理、SQL语句、数据库查询分析

训练技能

数据仓库Hive基本操作、创建数据库和表、使用SQL语句进行查询分析

任务清单

- 启动Hadoop和Hive

- 创建数据库和表

- 简单查询分析

- 查询条数统计分析

- 关键字条件查询分析

- 根据用户行为分析

- 用户实时查询分析

一、操作Hive

请登录Linux系统(本教程统一采用hadoop用户名登录系统),然后,打开一个终端(可以按快捷键Ctrl+Alt+T)。

本教程中,Hadoop的安装目录是“/usr/local/hadoop”,Hive的安装目录是“/usr/local/hive”。

因为需要借助于MySQL保存Hive的元数据,所以,请首先启动MySQL数据库,请在终端中输入下面命令:

service mysql start //可以在Linux的任何目录下执行该命令

由于Hive是基于Hadoop的数据仓库,使用HiveQL语言撰写的查询语句,最终都会被Hive自动解析成MapReduce任务由Hadoop去具体执行,因此,需要启动Hadoop,然后再启动Hive。

请执行下面命令启动Hadoop(如果你已经启动了Hadoop就不用再次启动了):

cd /usr/local/hadoop

./sbin/start-all.sh

然后,执行jps命令看一下当前运行的进程:

jps

如果出现下面这些进程,说明Hadoop启动成功了。

3765 NodeManager

3639 ResourceManager

3800 Jps

3261 DataNode

3134 NameNode

3471 SecondaryNameNode

下面,继续执行下面命令启动进入Hive:

cd /usr/local/hive

./bin/hive //启动Hive

通过上述过程,我们就完成了MySQL、Hadoop和Hive三者的启动。

启动成功以后,就进入了“hive>”命令提示符状态,可以输入类似SQL语句的HiveQL语句。

然后,在“hive>”命令提示符状态下执行下面命令:

hive> use dblab; //使用dblab数据库

hive> show tables; //显示数据库中所有表。

hive> show create table bigdata_user; //查看bigdata_user表的各种属性;

执行结果如下:

OK

CREATE EXTERNAL TABLE `bigdata_user`(

`id` int,

`uid` string,

`item_id` string,

`behavior_type` int,

`item_category` string,

`visit_date` date,

`province` string)

COMMENT 'Welcome to xmu dblab!'

ROW FORMAT SERDE

'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

WITH SERDEPROPERTIES (

'field.delim'='\t',

'serialization.format'='\t')

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

'hdfs://localhost:9000/bigdatacase/dataset'

TBLPROPERTIES (

'numFiles'='1',

'totalSize'='15590786',

'transient_lastDdlTime'='1480217306')

Time taken: 0.715 seconds, Fetched: 24 row(s)

可以执行下面命令查看表的简单结构:

hive> desc bigdata_user;

执行结果如下:

OK

id int

uid string

item_id string

behavior_type int

item_category string

visit_date date

province string

Time taken: 0.267 seconds, Fetched: 7 row(s)

二、简单查询分析

先测试一下简单的指令:

hive> select behavior_type from bigdata_user limit 10;//查看前10位用户对商品的行为

执行结果如下:

OK

1

1

1

1

1

4

1

1

1

1

Time taken: 2.561 seconds, Fetched: 10 row(s)

如果要查出每位用户购买商品时的多种信息,输出语句格式为 select 列1,列2,….,列n from 表名;

比如我们现在查询前20位用户购买商品时的时间和商品的种类

hive> select visit_date,item_category from bigdata_user limit 20;

执行结果如下:

OK

2014-12-08 4076

2014-12-12 5503

2014-12-12 5503

2014-12-02 9762

2014-12-12 5232

2014-12-02 9762

2014-12-12 5503

2014-12-12 10894

2014-12-12 6513

2014-12-12 10894

2014-12-12 2825

2014-11-28 2825

2014-12-15 3200

2014-12-03 10576

2014-11-20 10576

2014-12-13 10576

2014-12-08 10576

2014-12-14 7079

2014-12-02 6669

2014-12-12 5232

Time taken: 0.401 seconds, Fetched: 20 row(s)

有时我们在表中查询可以利用嵌套语句,如果列名太复杂可以设置该列的别名,以简化我们操作的难度,以下我们可以举个例子:

hive> select e.bh, e.it from (select behavior_type as bh, item_category as it from bigdata_user) as e limit 20;

执行结果如下:

OK

1 4076

1 5503

1 5503

1 9762

1 5232

4 9762

1 5503

1 10894

1 6513

1 10894

1 2825

1 2825

1 3200

1 10576

1 10576

1 10576

1 10576

1 7079

1 6669

1 5232

Time taken: 0.374 seconds, Fetched: 20 row(s)

这里简单的做个讲解,behavior_type as bh ,item_category as it就是把behavior_type 设置别名 bh ,item_category 设置别名 it,FROM的括号里的内容我们也设置了别名e,这样调用时用e.bh,e.it,可以简化代码。

三、查询条数统计分析

经过简单的查询后我们同样也可以在select后加入更多的条件对表进行查询,下面可以用函数来查找我们想要的内容。

(1)用聚合函数count()计算出表内有多少条行数据

hive> select count(*) from bigdata_user;//用聚合函数count()计算出表内有多少条行数据

执行结果如下:

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20161127192506_cb00d4a1-b9e3-426e-a2be-d971a61f686b

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2016-11-27 19:25:10,555 Stage-1 map = 0%, reduce = 0%

2016-11-27 19:25:12,606 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local1072810798_0001

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 62395912 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

300000

Time taken: 6.418 seconds, Fetched: 1 row(s)

我们可以看到,得出的结果为OK下的那个数字300000(因为我们的small_user.csv中包含了300000条记录,导入到Hive中)。

(2)在函数内部加上distinct,查出uid不重复的数据有多少条

下面继续执行操作:

hive> select count(distinct uid) from bigdata_user;//在函数内部加上distinct,查出uid不重复的数据有多少条

执行结果如下:

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20161127192506_cb00d4a1-b9e3-426e-a2be-d971a61f686b

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2016-11-27 19:25:10,555 Stage-1 map = 0%, reduce = 0%

2016-11-27 19:25:12,606 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local1072810798_0001

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 62395912 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

300000

Time taken: 6.418 seconds, Fetched: 1 row(s)

hive> select count(distinct uid) from bigdata_user;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20161127193437_9e0a95ac-b5f2-44b5-acf8-97c90fc1e8c8

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2016-11-27 19:34:40,045 Stage-1 map = 0%, reduce = 0%

2016-11-27 19:34:42,074 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local2080512810_0002

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 93577484 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

270

Time taken: 4.689 seconds, Fetched: 1 row(s)

** (3)查询不重复的数据有多少条(为了排除客户刷单情况) **

hive>select count(*) from (select uid,item_id,behavior_type,item_category,visit_date,province from bigdata_user group by uid,item_id,behavior_type,item_category,visit_date,province having count(*)=1)a;

执行结果如下:

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20161127195152_81d536c5-d492-4b59-a7db-4352717c8a70

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2016-11-27 19:51:55,380 Stage-1 map = 0%, reduce = 0%

2016-11-27 19:52:03,401 Stage-1 map = 100%, reduce = 0%

2016-11-27 19:52:06,413 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local15333446_0003

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2016-11-27 19:52:08,283 Stage-2 map = 100%, reduce = 100%

Ended Job = job_local2040099087_0004

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 124759056 HDFS Write: 0 SUCCESS

Stage-Stage-2: HDFS Read: 124759056 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

284183

Time taken: 16.05 seconds, Fetched: 1 row(s)

可以看出,排除掉重复信息以后,只有284183条记录。

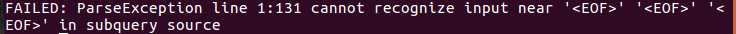

注意:嵌套语句最好取别名,就是上面的a,否则很容易出现如下错误.

四.关键字条件查询分析

1.以关键字的存在区间为条件的查询

使用where可以缩小查询分析的范围和精确度,下面用实例来测试一下。

(1)查询2014年12月10日到2014年12月13日有多少人浏览了商品

hive>select count(*) from bigdata_user where behavior_type='1' and visit_date<'2014-12-13' and visit_date>'2014-12-10';

执行结果如下:

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20161127200005_fb5120dc-acbb-43f3-97aa-ee766ead98e5

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2016-11-27 20:00:07,362 Stage-1 map = 0%, reduce = 0%

2016-11-27 20:00:08,376 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local1606042318_0005

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 155940628 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

26329

Time taken: 3.058 seconds, Fetched: 1 row(s)

(2)以月的第n天为统计单位,依次显示第n天网站卖出去的商品的个数

hive> select count(distinct uid), day(visit_date) from bigdata_user where behavior_type='4' group by day(visit_date);

执行结果如下:

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20161127200220_738d202d-242c-415d-a986-f76faaff791a

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2016-11-27 20:02:22,945 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local727434228_0006

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 187122200 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

37 1

48 2

42 3

38 4

42 5

33 6

42 7

36 8

34 9

40 10

43 11

98 12

39 13

43 14

42 15

44 16

42 17

66 18

38 19

50 20

33 21

34 22

32 23

47 24

34 25

31 26

30 27

34 28

39 29

38 30

Time taken: 2.378 seconds, Fetched: 30 row(s)

2.关键字赋予给定值为条件,对其他数据进行分析

取给定时间和给定地点,求当天发出到该地点的货物的数量

hive> select count(*) from bigdata_user where province='江西' and visit_date='2014-12-12' and behavior_type='4';

执行结果如下:

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hadoop_20161127200520_4cdc4b74-5dbe-48d9-8e39-bdfaff90ff2e

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2016-11-27 20:05:22,874 Stage-1 map = 0%, reduce = 0%

2016-11-27 20:05:23,879 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local1743806040_0007

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 218303772 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

7

Time taken: 3.421 seconds, Fetched: 1 row(s)

五.根据用户行为分析

从现在开始,我们只给出查询语句,将不再给出执行结果。

1.查询一件商品在某天的购买比例或浏览比例

hive> select count(*) from bigdata_user where visit_date='2014-12-11'and behavior_type='4';//查询有多少用户在2014-12-11购买了商品

hive> select count(*) from bigdata_user where visit_date ='2014-12-11';//查询有多少用户在2014-12-11点击了该店

根据上面语句得到购买数量和点击数量,两个数相除即可得出当天该商品的购买率。

2.查询某个用户在某一天点击网站占该天所有点击行为的比例(点击行为包括浏览,加入购物车,收藏,购买)

hive> select count(*) from bigdata_user where uid=10001082 and visit_date='2014-12-12';//查询用户10001082在2014-12-12点击网站的次数

hive> select count(*) from bigdata_user where visit_date='2014-12-12';//查询所有用户在这一天点击该网站的次数

上面两条语句的结果相除,就得到了要要求的比例。

3.给定购买商品的数量范围,查询某一天在该网站的购买该数量商品的用户id

hive> select uid from bigdata_user where behavior_type='4' and visit_date='2014-12-12' group by uid having count(behavior_type='4')>5;//查询某一天在该网站购买商品超过5次的用户id

六.用户实时查询分析

某个地区的用户当天浏览网站的次数

hive> create table scan(province STRING,scan INT) COMMENT 'This is the search of bigdataday' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' STORED AS TEXTFILE;//创建新的数据表进行存储

hive> insert overwrite table scan select province,count(behavior_type) from bigdata_user where behavior_type='1' group by province;//导入数据

hive> select * from scan;//显示结果

执行结果如下:

上海市 8364

云南 8454

内蒙古 8172

北京市 8258

台湾 8382

吉林 8272

四川 8359

天津市 8478

宁夏 8205

安徽 8205

山东 8236

山西 8503

广东 8228

广西 8358

新疆 8316

江苏 8226

江西 8403

河北 8363

河南 8382

浙江 8310

海南 8391

湖北 8183

湖南 8368

澳门 8264

甘肃 8415

福建 8270

西藏 8347

贵州 8505

辽宁 8292

重庆市 8506

陕西 8379

青海 8427

香港 8386

黑龙江 8309

Time taken: 0.248 seconds, Fetched: 34 row(s)

到这里,Hive数据分析实验顺利结束。可以继续访问《大数据课程实验案例:网站用户行为分析—-步骤三:Hive、MySQL、HBase数据互导》